Logging is big part at any orgnization and Babylon is not an exception. We have massive logging framework for compliance purpose. We process around 8K qps logs in our kubernetes clusters.

So you can imagine the scale for processing logs. Being a healthcare firm, we are pretty strict about how we log and treat sensitive data.

Coming back to the original issue, how measurable the logstash filters can be?

Developers reported that their logs are missing in centralized logging for non-production cluster and something is wrong with logstash.

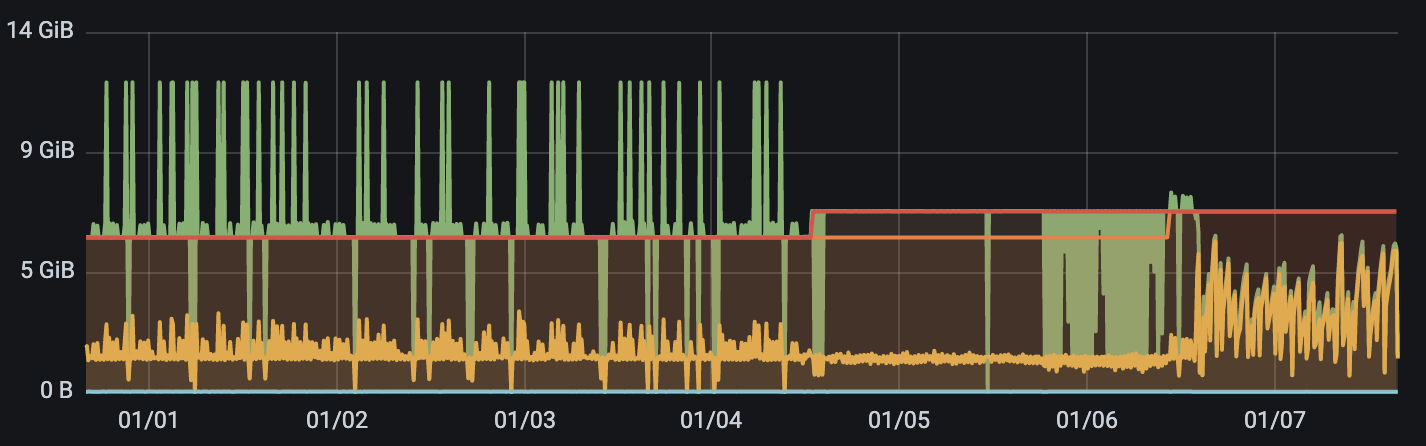

While looking at the Logstash grafana dashboard, There were heavy memory spikes hitting 14Gb bar per pod. Looking briefly at the version control to check if we had some deployment during the shutdown period, there was only log4j vulnerability fix for logstash.

The below log error caught our eyes, which was specifically configured in

truncate filter. And as it says, there is an empty attribute in the array2022-01-06T12:56:29,855][ERROR][logstash.javapipeline][output_newrelic] Pipeline worker error, the pipeline will be stopped :pipeline_id=>"output_newrelic", :error=>"(RuntimeError) Invalid FieldReference: `[access_log][request][http][query][]`", :exception=>Java::OrgJrubyExceptions::RuntimeError, :backtrace=>["org.logstash.ext.JrubyEventExtLibrary$RubyEvent.get(org/logstash/ext/JrubyEventExtLibrary.java:112)","usr.share.logstash.vendor.bundle.jruby.$2_dot_5_dot_0.gems.logstash_minus_filter_minus_truncate_minus_1_dot_0_dot_4.lib.logstash.filters.truncate.truncate(/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/logstash-filter-truncate-1.0.4/lib/logstash/filters/truncate.rb:93)", "usr.share.logstash.vendor.bundle.jruby.$2_dot_5_dot_0.gems.logstash_minus_filter_minus_truncate_minus_1_dot_0_dot_4.lib.logstash.filters.truncate.truncate(/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/logstash-filter-truncate-1.0.4/lib/logstash/filters/truncate.rb:101)", "org.jruby.RubyArray.each(org/jruby/RubyArray.java:1821)", "usr.share.logstash.vendor.bundle.jruby.$2_dot_5_dot_0.gems.logstash_minus_filter_minus_truncate_minus_1_dot_0_dot_4.lib.logstash.filters.truncate.truncate(/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/logstash-filter-truncate-1.0.4/lib/logstash/filters/truncate.rb:100)", "usr.share.logstash.vendor.bundle.jruby.$2_dot_5_dot_0.gems.logstash_minus_filter_minus_truncate_minus_1_dot_0_dot_4.lib.logstash.filters.truncate.truncate(/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/logstash-filter-truncate-1.0.4/lib/logstash/filters/truncate.rb:101)", "org.jruby.RubyArray.each(org/jruby/RubyArray.java:1821)", "usr.share.logstash.vendor.bundle.jruby.$2_dot_5_dot_0.gems.logstash_minus_filter_minus_truncate_minus_1_dot_0_dot_4.lib.logstash.filters.truncate.truncate(/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/logstash-filter-truncate-1.0.4/lib/logstash/filters/truncate.rb:100)"kv in our logstash. When parsing fails for kv filter, if tag_on_failure is set it won't throw any errors. But our below configuration did some tricks.ruby {

id => "http_query-decode"

code => "event.set('[access_log][request][http][query]', event.get('[access_log][request][http][query]').transform_values{ |xs| [*xs].collect(&URI.method(:decode_www_form_component)) })"

}[access_log][request][http][query][] empty array was created.URI.decode_www_form_component('%xz')

# ArgumentError: invalid %-encoding (%xz)Our main target was to reduce the resource usage for logstash causing frequent restarts and truncate filter was only enabled in the development environment. So we just removed the `truncate` filter.

Feel free to tag on twitter if you have queries or comments.

Happy logstashing🪵